Periodic pattern mining, an example with Canadian TV programs¶

[1]:

import skmine

print("This tutorial was tested with the following version of skmine :", skmine.__version__)

print("""

Be sure to execute this notebook in the dir `docs/tutorials/periodic`,

to be able to load the data files `.csv`.

If not, please do so and adapt the bash commands below

""")

%pwd

# %cd docs/tutorials/periodic

This tutorial was tested with the following version of skmine : 1.0.0

Be sure to execute this notebook in the dir `docs/tutorials/periodic`,

to be able to load the data files `.csv`.

If not, please do so and adapt the bash commands below

[1]:

'/home/hcourtei/Projects/F-WIN/scikit-mine/codes/skMineDev/docs/tutorials/periodic'

The problem, informally¶

Let’s take a simple example.

Imagine you set an alarm to wake up every day around 7:30AM, and go to work. Sometimes you wake up a bit earlier (your body anticipates the alarm), and sometimes a bit later, for example if you press the “snooze” button and refuse to face the fact that you have to wake up.

In python we can load those “wake up” events as logs, and store them in a pandas.Series, like

[2]:

import datetime as dt

import pandas as pd

one_day = 60 * 24 # a day in minutes

minutes = [0, one_day - 1, one_day * 2 - 1, one_day * 3, one_day * 4 + 2, one_day * 7]

S = pd.Series("wake up", index=minutes)

start = dt.datetime.strptime("16/04/2020 07:30", "%d/%m/%Y %H:%M")

S.index = S.index.map(lambda e: start + dt.timedelta(minutes=e))

S.index = S.index.round("min") # minutes as the lowest unit of difference

S

[2]:

2020-04-16 07:30:00 wake up

2020-04-17 07:29:00 wake up

2020-04-18 07:29:00 wake up

2020-04-19 07:30:00 wake up

2020-04-20 07:32:00 wake up

2020-04-23 07:30:00 wake up

dtype: object

[3]:

S.index.to_numpy()

[3]:

array(['2020-04-16T07:30:00.000000000', '2020-04-17T07:29:00.000000000',

'2020-04-18T07:29:00.000000000', '2020-04-19T07:30:00.000000000',

'2020-04-20T07:32:00.000000000', '2020-04-23T07:30:00.000000000'],

dtype='datetime64[ns]')

We can see the wake-up time is not exactly the same every day, but overall a consistent regular pattern seems to emerge.

Now imagine that in addition to wake up times, we also have records of other daily activities (meals, work, household chores, etc.), and that rather than a handful of days, those records span several years and make up several thousands of events

Can you automatically identify regularities to better understand your daily routine?

Introduction to periodic pattern mining¶

Periodic pattern mining aims at exploiting regularities not only about what happens by finding coordinated event occurrences, but also about when it happens and how it happens. It’s about not just finding things that happen repeatedly, but that happen regularly, with a consistent periodicity.

Next, we introduce the concept of cycles

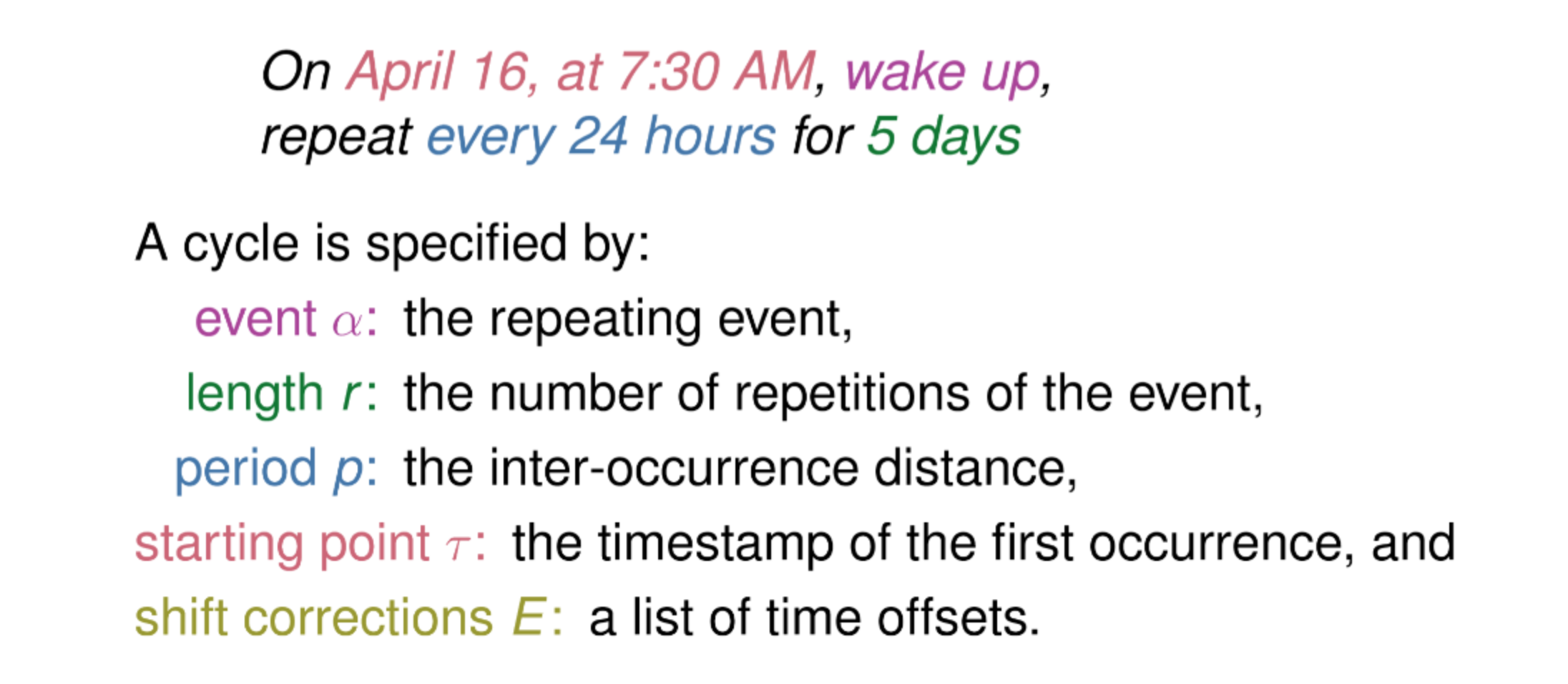

The cycle : a building block for periodic pattern mining¶

Here is an explicit example of a cycle

This definition, while being relatively simple, is general enough to allow us to find regularities in different types of logs

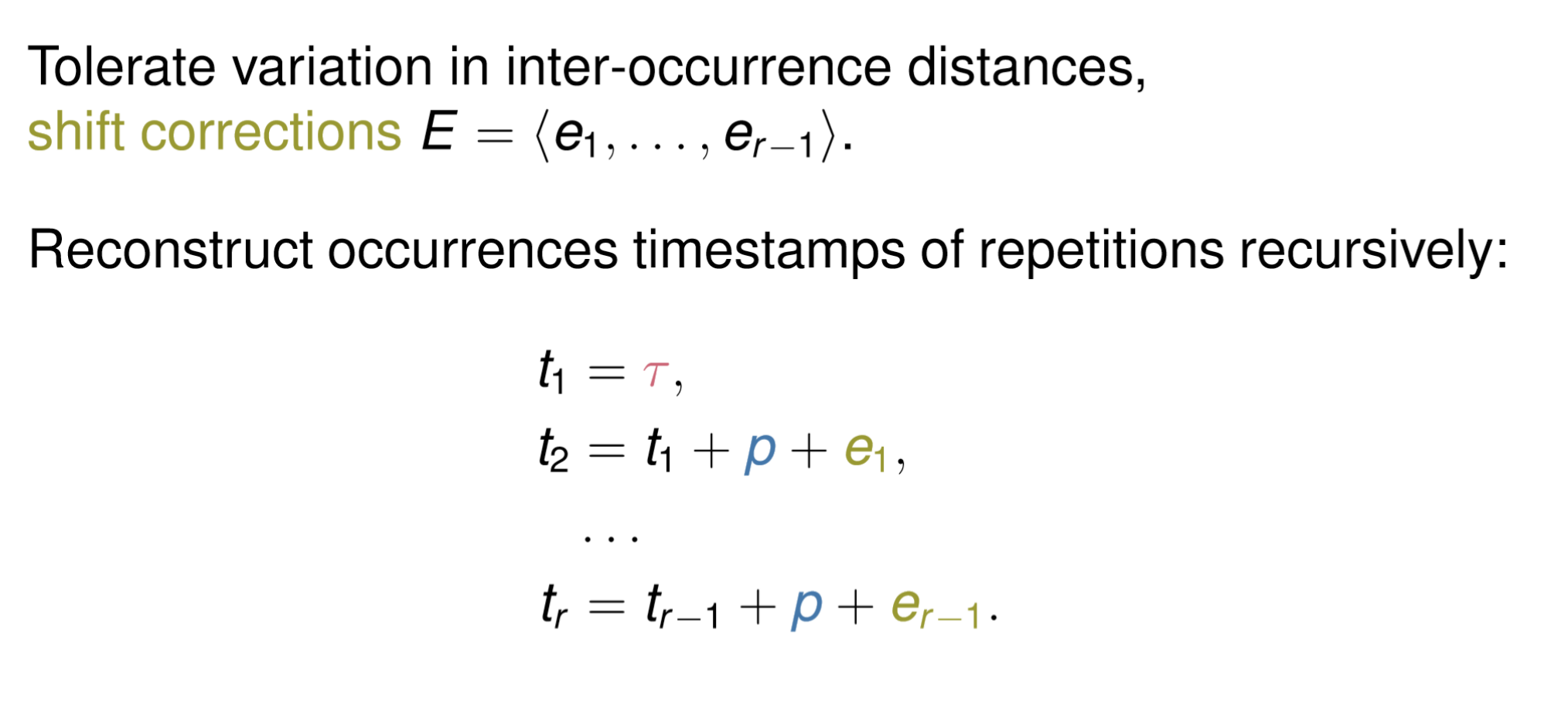

Handling noise in our timestamps¶

Needless to say, it would be too easy if events in our data were equally spaced. As data often comes noisy, we have to be fault tolerant, and allow small errors to sneak into our cycles.

That’s the role of **shift corrections*, which capture the small deviations from perfectly regular periodic repetitions, and allow to reconstruct the (noisy) original sequence of events, using the following relation

A tiny example with scikit-mine¶

scikit-mine offers a PeriodicPatternMiner, out of the box. You can use it to detect regularities, in the form of (possibly nested) cycles, which we call periodic patterns.

These patterns are evaluated using a MDL criterion, to help us extract a compact and representative collection of patterns from the data, trying to avoid redundancy between patterns, in particular.

[4]:

from skmine.periodic import PeriodicPatternMiner

pcm = PeriodicPatternMiner().fit(S)

pcm.transform(S)

[4]:

| t0 | pattern | repetition_major | period_major | sum_E | |

|---|---|---|---|---|---|

| 0 | 2020-04-16 07:30:00 | (wake up)[r=5 p=1 day, 0:00:00] | 5 | 1 days | 0 days 00:04:00 |

You can see one cycle has been extracted for our event wake up. The cycle covers the entire business week, but not the last monday separated by the weekend

It has a length of 5 and a period close to 1 day, as expected.

Also, note that we mix some information here. Our period of 1 day offers the best summary for this data. Accessing the little “shifts” as encountered in original data is also possible, with an extra argument in our call : pcm.transform(S,dE_sum=False)

[5]:

pd.set_option('display.max_colwidth', 100)

res_wake = pcm.transform(S,dE_sum=False)

res_wake.E

[5]:

0 [-1 days +23:59:00, 0 days 00:00:00, 0 days 00:01:00, 0 days 00:02:00]

Name: E, dtype: object

The last column named E contains a list of shifts to apply to our cycle in case we want to reconstruct the original data. Trailing zeros have been removed for efficiency, and their values are relative to the period, but we can see there is: * -1 minute shift (-1 days +23:59:00), between the 1st and 2nd entry (waking up at 7:30 on 04-16 at 7:29 on 04-17) * no shift between the 2nd and 3rd entry (still waking up at 7:29 on 04-18) * + 1 minute shift between the 3rd and 4th entry (back to

7:30 on 04-19) * + 2 minutes shift between the 4th and 5th entry (waking up at 7:32 on 04-20)

Events that are not captured by any pattern are called redisuals

[6]:

pcm.get_residuals()

[6]:

| time | event | |

|---|---|---|

| 0 | 2020-04-23 07:30:00 | wake up |

This way pcm does not store all the data, but has all information needed to reconstruct it entirely !!

[7]:

pcm.reconstruct()

[7]:

| time | event | |

|---|---|---|

| 0 | 2020-04-16 07:30:00 | wake up |

| 1 | 2020-04-17 07:29:00 | wake up |

| 2 | 2020-04-18 07:29:00 | wake up |

| 3 | 2020-04-19 07:30:00 | wake up |

| 4 | 2020-04-20 07:32:00 | wake up |

An example with Canadian TV programs¶

Fetching logs from canadian TV¶

In this section we are going to load some event logs of TV programs (the WHAT), indexed by their broadcast timestamps (the WHEN).

PeriodicPatternMiner is here to help us discovering regularities (the HOW)

[8]:

from skmine.datasets import fetch_canadian_tv

from skmine.periodic import PeriodicPatternMiner

Searching for cycles in TV programs¶

Remember about the definition of cycles ? Let’s apply it to our TV programs

In our case

\(\alpha\) is the name of a TV program

\(r\) is the number of broadcasts (repetitions) for this TV program (inside this cycle)

\(p\) is the optimal time delta between broadcasts in this cycle. If a program is meant to be live everyday at 14:00PM, then \(p\) is likely to be 1 day

\(\tau\) is the first broadcast time in this cycle

\(dE\) are the shift corrections between the \(p\) and the actual broadcast time of an event. If a TV program was scheduled at 8:30:00AM and it went on air at 8:30:23AM the same day, then we keep track of a 23 seconds shift. This way we can summarize our data (via cycles), and reconstruct it (via shift corrections).

Finally we are going to dig a little deeper into these cycles, to answer quite complex questions about our logs. We will see that cycles contains usefull information about our input data

[9]:

ctv_logs = fetch_canadian_tv()

ctv_logs.head()

[9]:

timestamp

2020-08-01 06:00:00 The Moblees

2020-08-01 06:11:00 Big Block Sing Song

2020-08-01 06:13:00 Big Block Sing Song

2020-08-01 06:15:00 CBC Kids

2020-08-01 06:15:00 CBC Kids

Name: canadian_tv, dtype: string

Compute only simple cycles on ctv_logs with the karg complex=False :

[10]:

pcm = PeriodicPatternMiner(complex=False).fit(ctv_logs)

pcm.transform(ctv_logs)

/home/hcourtei/Projects/F-WIN/scikit-mine/codes/skMineDev/skmine/periodic/cycles.py:131: UserWarning: found 234 duplicates in the input sequence, they have been removed.

warnings.warn(f"found {diff} duplicates in the input sequence, they have been removed.")

[10]:

| t0 | pattern | repetition_major | period_major | sum_E | |

|---|---|---|---|---|---|

| 20 | 2020-08-01 04:00:00 | (Rick Mercer Report)[r=5 p=7 days, 0:00:00] | 5 | 7 days 00:00:00 | 0 days 00:00:00 |

| 21 | 2020-08-01 04:30:00 | (Rick Mercer Report)[r=5 p=7 days, 0:00:00] | 5 | 7 days 00:00:00 | 0 days 00:00:00 |

| 0 | 2020-08-01 05:00:00 | (Grand Designs)[r=31 p=1 day, 0:00:00] | 31 | 1 days 00:00:00 | 0 days 00:00:00 |

| 59 | 2020-08-01 06:00:00 | (The Moblees)[r=5 p=7 days, 0:00:00] | 5 | 7 days 00:00:00 | 0 days 00:00:00 |

| 29 | 2020-08-01 06:11:00 | (Big Block Sing Song)[r=5 p=7 days, 0:00:00] | 5 | 7 days 00:00:00 | 0 days 00:00:00 |

| ... | ... | ... | ... | ... | ... |

| 254 | 2020-08-26 14:00:00 | (Jamie's Super Foods)[r=3 p=1 day, 0:00:00] | 3 | 1 days 00:00:00 | 0 days 00:00:00 |

| 183 | 2020-08-27 02:00:00 | (Mr. D)[r=4 p=0:30:00] | 4 | 0 days 00:30:00 | 0 days 00:00:00 |

| 2 | 2020-08-28 00:00:00 | (Schitt's Creek)[r=8 p=0:30:00] | 8 | 0 days 00:30:00 | 0 days 00:00:00 |

| 129 | 2020-08-29 06:15:00 | (CBC Kids)[r=23 p=0:12:00] | 23 | 0 days 00:12:00 | 0 days 02:26:00 |

| 126 | 2020-08-30 06:11:00 | (CBC Kids)[r=11 p=0:12:00] | 11 | 0 days 00:12:00 | 0 days 00:49:00 |

278 rows × 5 columns

Compute simple and complex (with horizontal and vertical combinations) cycles on ctv_logs :

[11]:

pcm = PeriodicPatternMiner(complex=True).fit(ctv_logs)

/home/hcourtei/Projects/F-WIN/scikit-mine/codes/skMineDev/skmine/periodic/cycles.py:131: UserWarning: found 234 duplicates in the input sequence, they have been removed.

warnings.warn(f"found {diff} duplicates in the input sequence, they have been removed.")

[12]:

pd.set_option('display.max_colwidth', 100)

cycles = pcm.transform(ctv_logs)

cycles

[12]:

| t0 | pattern | repetition_major | period_major | sum_E | |

|---|---|---|---|---|---|

| 34 | 2020-08-01 04:00:00 | (Rick Mercer Report [d=0:30:00] Rick Mercer Report)[r=5 p=7 days, 0:00:00] | 5 | 7 days 00:00:00 | 0 days 00:00:00 |

| 0 | 2020-08-01 05:00:00 | (Grand Designs)[r=31 p=1 day, 0:00:00] | 31 | 1 days 00:00:00 | 0 days 00:00:00 |

| 7 | 2020-08-01 06:00:00 | (The Moblees [d=0:11:00] Big Block Sing Song [d=0:02:00] Big Block Sing Song [d=0:02:00] CBC Kid... | 5 | 7 days 00:00:00 | 0 days 00:03:00 |

| 19 | 2020-08-01 06:15:00 | (CBC Kids [d=1:40:00] Big Block Sing Song [d=0:02:00] Furchester Hotel [d=0:38:00] Ollie: The Bo... | 5 | 7 days 00:00:00 | 0 days 00:02:00 |

| 95 | 2020-08-01 06:15:00 | (CBC Kids)[r=29 p=0:12:00] | 29 | 0 days 00:12:00 | 0 days 02:54:00 |

| ... | ... | ... | ... | ... | ... |

| 109 | 2020-08-26 14:00:00 | (Jamie's Super Foods)[r=3 p=1 day, 0:00:00] | 3 | 1 days 00:00:00 | 0 days 00:00:00 |

| 86 | 2020-08-27 02:00:00 | (Mr. D)[r=4 p=0:30:00] | 4 | 0 days 00:30:00 | 0 days 00:00:00 |

| 16 | 2020-08-28 00:00:00 | (Schitt's Creek)[r=8 p=0:30:00] | 8 | 0 days 00:30:00 | 0 days 00:00:00 |

| 100 | 2020-08-29 06:15:00 | (CBC Kids)[r=23 p=0:12:00] | 23 | 0 days 00:12:00 | 0 days 02:26:00 |

| 92 | 2020-08-31 07:00:00 | (CBC Kids)[r=17 p=0:13:00] | 17 | 0 days 00:13:00 | 0 days 02:15:00 |

121 rows × 5 columns

Note : no need to worry for the warning, it’s here to notify duplicate event/timestamp pairs have been found

Viewing the patterns¶

Sometimes the patterns are complicated to understand textually. The draw_pattern function allows you to visualise them. Simply indicate in pattern the id of the pattern you wish to view from the results of the previous transform.

[13]:

pcm.draw_pattern(19)

# if you want to save the tree, you can add a `directory` parameter to indicate the location of the results (pdf + generated DOT file)

[13]:

Patterns import - export¶

[14]:

#Store in a json file the patterns ("patterns.json" by default)

pcm.export_patterns(file = "patterns.json")

# reinitialize the PeriodicPatternMiner :

pcm = PeriodicPatternMiner()

# import the json file ("patterns.json" by default)

pcm.import_patterns(file = "patterns.json")

# recompute the pattern :

cycles = pcm.transform(ctv_logs)

cycles

[14]:

| t0 | pattern | repetition_major | period_major | sum_E | |

|---|---|---|---|---|---|

| 0 | 2020-08-01 04:00:00 | (Rick Mercer Report [d=0:30:00] Rick Mercer Report)[r=5 p=7 days, 0:00:00] | 5 | 7 days 00:00:00 | 0 days 00:00:00 |

| 1 | 2020-08-01 05:00:00 | (Grand Designs)[r=31 p=1 day, 0:00:00] | 31 | 1 days 00:00:00 | 0 days 00:00:00 |

| 2 | 2020-08-01 06:00:00 | (The Moblees [d=0:11:00] Big Block Sing Song [d=0:02:00] Big Block Sing Song [d=0:02:00] CBC Kid... | 5 | 7 days 00:00:00 | 0 days 00:03:00 |

| 3 | 2020-08-01 06:15:00 | (CBC Kids [d=1:40:00] Big Block Sing Song [d=0:02:00] Furchester Hotel [d=0:38:00] Ollie: The Bo... | 5 | 7 days 00:00:00 | 0 days 00:02:00 |

| 4 | 2020-08-01 06:15:00 | (CBC Kids)[r=29 p=0:12:00] | 29 | 0 days 00:12:00 | 0 days 02:54:00 |

| ... | ... | ... | ... | ... | ... |

| 116 | 2020-08-26 14:00:00 | (Jamie's Super Foods)[r=3 p=1 day, 0:00:00] | 3 | 1 days 00:00:00 | 0 days 00:00:00 |

| 117 | 2020-08-27 02:00:00 | (Mr. D)[r=4 p=0:30:00] | 4 | 0 days 00:30:00 | 0 days 00:00:00 |

| 118 | 2020-08-28 00:00:00 | (Schitt's Creek)[r=8 p=0:30:00] | 8 | 0 days 00:30:00 | 0 days 00:00:00 |

| 119 | 2020-08-29 06:15:00 | (CBC Kids)[r=23 p=0:12:00] | 23 | 0 days 00:12:00 | 0 days 02:26:00 |

| 120 | 2020-08-31 07:00:00 | (CBC Kids)[r=17 p=0:13:00] | 17 | 0 days 00:13:00 | 0 days 02:15:00 |

121 rows × 5 columns

Now that we have our cycles in a pandas.DataFrame, we can play with the pandas API and answer questions about our logs

Did I find cycles for the TV show “Arthurt Shorts”¶

[15]:

cycles

[15]:

| t0 | pattern | repetition_major | period_major | sum_E | |

|---|---|---|---|---|---|

| 0 | 2020-08-01 04:00:00 | (Rick Mercer Report [d=0:30:00] Rick Mercer Report)[r=5 p=7 days, 0:00:00] | 5 | 7 days 00:00:00 | 0 days 00:00:00 |

| 1 | 2020-08-01 05:00:00 | (Grand Designs)[r=31 p=1 day, 0:00:00] | 31 | 1 days 00:00:00 | 0 days 00:00:00 |

| 2 | 2020-08-01 06:00:00 | (The Moblees [d=0:11:00] Big Block Sing Song [d=0:02:00] Big Block Sing Song [d=0:02:00] CBC Kid... | 5 | 7 days 00:00:00 | 0 days 00:03:00 |

| 3 | 2020-08-01 06:15:00 | (CBC Kids [d=1:40:00] Big Block Sing Song [d=0:02:00] Furchester Hotel [d=0:38:00] Ollie: The Bo... | 5 | 7 days 00:00:00 | 0 days 00:02:00 |

| 4 | 2020-08-01 06:15:00 | (CBC Kids)[r=29 p=0:12:00] | 29 | 0 days 00:12:00 | 0 days 02:54:00 |

| ... | ... | ... | ... | ... | ... |

| 116 | 2020-08-26 14:00:00 | (Jamie's Super Foods)[r=3 p=1 day, 0:00:00] | 3 | 1 days 00:00:00 | 0 days 00:00:00 |

| 117 | 2020-08-27 02:00:00 | (Mr. D)[r=4 p=0:30:00] | 4 | 0 days 00:30:00 | 0 days 00:00:00 |

| 118 | 2020-08-28 00:00:00 | (Schitt's Creek)[r=8 p=0:30:00] | 8 | 0 days 00:30:00 | 0 days 00:00:00 |

| 119 | 2020-08-29 06:15:00 | (CBC Kids)[r=23 p=0:12:00] | 23 | 0 days 00:12:00 | 0 days 02:26:00 |

| 120 | 2020-08-31 07:00:00 | (CBC Kids)[r=17 p=0:13:00] | 17 | 0 days 00:13:00 | 0 days 02:15:00 |

121 rows × 5 columns

[16]:

cycles[cycles["pattern"].apply(lambda x: "Arthur Shorts" in x)]

[16]:

| t0 | pattern | repetition_major | period_major | sum_E | |

|---|---|---|---|---|---|

| 15 | 2020-08-01 10:48:00 | (Addison [d=0:12:00] Arthur Shorts [d=0:13:00] Stella & Sam [d=0:12:00] Wandering Wenda [d=0:34:... | 5 | 7 days 00:00:00 | 0 days 00:00:00 |

| 50 | 2020-08-03 09:35:00 | ((Arthur Shorts [d=0:13:00] Arthur Shorts)[r=5 p=1 day, 0:00:00] [d=0:45:00] (Furchester Hotel [... | 3 | 6 days 23:59:00 | 0 days 00:22:00 |

| 112 | 2020-08-24 09:35:00 | (Arthur Shorts [d=0:13:00] Arthur Shorts [d=0:15:00] Kiri & Lou [d=0:17:00] Furchester Hotel [d=... | 5 | 0 days 23:59:00 | 0 days 00:11:00 |

What are the top 10 longest cycles ?¶

[17]:

cycles.nlargest(10, ["repetition_major"])

[17]:

| t0 | pattern | repetition_major | period_major | sum_E | |

|---|---|---|---|---|---|

| 1 | 2020-08-01 05:00:00 | (Grand Designs)[r=31 p=1 day, 0:00:00] | 31 | 1 days 00:00:00 | 0 days 00:00:00 |

| 6 | 2020-08-01 06:57:00 | (CBC Kids)[r=31 p=1 day, 0:00:00] | 31 | 1 days 00:00:00 | 0 days 00:33:00 |

| 4 | 2020-08-01 06:15:00 | (CBC Kids)[r=29 p=0:12:00] | 29 | 0 days 00:12:00 | 0 days 02:54:00 |

| 105 | 2020-08-22 06:15:00 | (CBC Kids)[r=27 p=0:12:00] | 27 | 0 days 00:12:00 | 0 days 02:14:00 |

| 95 | 2020-08-15 06:15:00 | (CBC Kids)[r=25 p=0:12:00] | 25 | 0 days 00:12:00 | 0 days 02:00:00 |

| 73 | 2020-08-08 06:15:00 | (CBC Kids)[r=23 p=0:11:00] | 23 | 0 days 00:11:00 | 0 days 02:42:00 |

| 119 | 2020-08-29 06:15:00 | (CBC Kids)[r=23 p=0:12:00] | 23 | 0 days 00:12:00 | 0 days 02:26:00 |

| 120 | 2020-08-31 07:00:00 | (CBC Kids)[r=17 p=0:13:00] | 17 | 0 days 00:13:00 | 0 days 02:15:00 |

| 106 | 2020-08-23 06:11:00 | (CBC Kids)[r=12 p=0:13:00] | 12 | 0 days 00:13:00 | 0 days 00:38:00 |

| 68 | 2020-08-07 00:13:00 | (Schitt's Creek)[r=8 p=0:30:00] | 8 | 0 days 00:30:00 | 0 days 00:13:00 |

[18]:

pcm.reconstruct([1])

[18]:

| time | event | |

|---|---|---|

| 0 | 2020-08-01 05:00:00 | Grand Designs |

| 1 | 2020-08-02 05:00:00 | Grand Designs |

| 2 | 2020-08-03 05:00:00 | Grand Designs |

| 3 | 2020-08-04 05:00:00 | Grand Designs |

| 4 | 2020-08-05 05:00:00 | Grand Designs |

| 5 | 2020-08-06 05:00:00 | Grand Designs |

| 6 | 2020-08-07 05:00:00 | Grand Designs |

| 7 | 2020-08-08 05:00:00 | Grand Designs |

| 8 | 2020-08-09 05:00:00 | Grand Designs |

| 9 | 2020-08-10 05:00:00 | Grand Designs |

| 10 | 2020-08-11 05:00:00 | Grand Designs |

| 11 | 2020-08-12 05:00:00 | Grand Designs |

| 12 | 2020-08-13 05:00:00 | Grand Designs |

| 13 | 2020-08-14 05:00:00 | Grand Designs |

| 14 | 2020-08-15 05:00:00 | Grand Designs |

| 15 | 2020-08-16 05:00:00 | Grand Designs |

| 16 | 2020-08-17 05:00:00 | Grand Designs |

| 17 | 2020-08-18 05:00:00 | Grand Designs |

| 18 | 2020-08-19 05:00:00 | Grand Designs |

| 19 | 2020-08-20 05:00:00 | Grand Designs |

| 20 | 2020-08-21 05:00:00 | Grand Designs |

| 21 | 2020-08-22 05:00:00 | Grand Designs |

| 22 | 2020-08-23 05:00:00 | Grand Designs |

| 23 | 2020-08-24 05:00:00 | Grand Designs |

| 24 | 2020-08-25 05:00:00 | Grand Designs |

| 25 | 2020-08-26 05:00:00 | Grand Designs |

| 26 | 2020-08-27 05:00:00 | Grand Designs |

| 27 | 2020-08-28 05:00:00 | Grand Designs |

| 28 | 2020-08-29 05:00:00 | Grand Designs |

| 29 | 2020-08-30 05:00:00 | Grand Designs |

| 30 | 2020-08-31 05:00:00 | Grand Designs |

[19]:

pcm.get_residuals()

[19]:

| time | event | |

|---|---|---|

| 143 | 2020-08-01 01:12:00 | OLYMPIC GAMES REPLAY |

| 287 | 2020-08-01 13:00:00 | Bondi Vet |

| 47 | 2020-08-01 13:30:00 | Basketball |

| 122 | 2020-08-01 15:55:00 | Basketball |

| 347 | 2020-08-01 19:00:00 | Still Standing |

| ... | ... | ... |

| 351 | 2020-08-31 16:00:00 | Escape to the Country |

| 348 | 2020-08-31 17:00:00 | Murdoch Mysteries |

| 409 | 2020-08-31 17:59:00 | News |

| 386 | 2020-08-31 19:00:00 | Hockey Night in Canada |

| 74 | 2020-08-31 23:15:00 | CBC News: The National |

454 rows × 2 columns

what are the 10 most unpunctual TV programs ?¶

For this we are going to : 1. extract the shift corrections along with other informations about our cycles 2. compute the sum of the absolute values for the shift corrections, for every cycles 3. get the 10 biggest sums

[20]:

# full_cycles = pcm.transform(x)

# full_cycles.head()

[21]:

# def absolute_sum(*args):

# return sum(map(abs, *args))

# # level 0 is the name of the TV program

# shift_sums = full_cycles["dE"].map(absolute_sum).groupby(level=[0]).sum()

# shift_sums.nlargest(10)

[22]:

# def absolute_sum(*args):

# return sum(map(abs, *args))

# # level 0 is the name of the TV program

# shift_sums = full_cycles["dE"].map(absolute_sum).groupby(level=[0]).sum()

# shift_sums.nlargest(10)

What TV programs have been broadcasted every day for at least 5 days straight?¶

Let’s make use of the pandas.DataFrame.query method to express our question in an SQL-like syntax

[23]:

# cycles.query('length_major >= 5 and period_major >= 3600', engine='python')

# # cycles.query('length >= 5 and period == "1 days"', engine='python')

What TV programs are broadcast only on business days ?¶

From the previous query we see we have a lot of 5-length cycles, with periods of 1 day. An intuition is that these cycles take place on business days. Let’s confirm this by considering cycles with 1. start timestamps on mondays 2. periods of roughly 1 day

[24]:

# monday_starts = cycles[cycles.start.dt.weekday == 0] # start on monday

# monday_starts.query('length == 5 and period == "1 days"', engine='python')

Load your own custom datasets¶

fetch_file function.1. **Each line consists of a datetime followed by its associated event.¶

[25]:

with open("dataset_datetime.csv", "r") as f:

data = f.read().splitlines()

data

[25]:

['2020-04-16 07:30:00,wake up',

'2020-04-17 07:29:00,wake up',

'2020-04-17 07:29:00,wake up',

'2020-04-18 07:29:00,wake up',

'2020-04-19 07:30:00,wake up',

'2020-04-20 07:32:00,wake up',

'2020-04-23 07:30:00,wake up']

[26]:

from skmine.datasets.periodic import fetch_file

data = fetch_file("dataset_datetime.csv", separator=",") # by default separator is already comma

pcm = PeriodicPatternMiner().fit(data)

pcm.transform(data)

/home/hcourtei/Projects/F-WIN/scikit-mine/codes/skMineDev/skmine/periodic/cycles.py:131: UserWarning: found 1 duplicates in the input sequence, they have been removed.

warnings.warn(f"found {diff} duplicates in the input sequence, they have been removed.")

[26]:

| t0 | pattern | repetition_major | period_major | sum_E | |

|---|---|---|---|---|---|

| 0 | 2020-04-16 07:30:00 | (wake up)[r=5 p=1 day, 0:00:00] | 5 | 1 days | 0 days 00:04:00 |

Note that several formats for datetimes can be infered from example. But you can force to parse string according to a specific format with the extra option : format="%d/%m/%Y %H:%M:%S" . see https://docs.python.org/3/library/datetime.html#strftime-and-strptime-behavior for all possibilities - 2020-04-23 07:30:00 -> YYYY-MM-DD hh:mm:ss - 1997-07-23T19:20+01:00 -> YYYY-MM-DDThh:mmTZD (TZD = time zone designator) - 1997-07-23T19:20:30.45+01:00 -> YYYY-MM-DDThh:mm:ss.sTZD -

02/23/2018 -> MM/DD/YYYY - 02/23/18 -> MM/DD/YY - …

2 points of caution are to be noted: - Beware of this type of format “Feb 23, 2018” (Mth d, YYYY) because comma is the default separator. If you want to use this format, you must use double quotes around the date. - The MM/DD (Month/day) format is infered by default (when day is less than 12), for DD/MM; use format option as in example below:

[27]:

fetch_file("dataset_datetime_DD_MM.csv", separator=',' ,format="%d/%m/%Y %H:%M:%S")

[27]:

timestamp

2018-02-10 07:30:00 wake up

2018-02-23 07:30:00 coffee

2018-03-10 07:30:00 wake up

Name: dataset_datetime_DD_MM.csv, dtype: string

2. Datetimes can also be replaced by integers.¶

Example:

[28]:

with open("dataset_integer.csv", "r") as f:

data = f.read().splitlines()

data

[28]:

['1,wake up',

'3,wake up',

'10,wake up',

'30,wake up',

'40,wake up',

'70,wake up',

'100,wake up']

3. Use only the name of the events.¶

[29]:

with open("dataset_no_index.csv", "r") as f:

data = f.read().splitlines()

data

[29]:

['wake up', 'wake up', 'wake up', 'wake up', 'wake up', 'wake up', 'wake up']

[30]:

data = fetch_file("dataset_no_index.csv")

data

[30]:

timestamp

0 wake up

1 wake up

2 wake up

3 wake up

4 wake up

5 wake up

6 wake up

Name: dataset_no_index.csv, dtype: string

References¶

Galbrun, E & Cellier, P & Tatti, N & Termier, A & Crémilleux, B “Mining Periodic Pattern with a MDL Criterion”

Galbrun, E “The Minimum Description Length Principle for Pattern Mining : A survey”

Termier, A “Periodic pattern mining”